Twitter recently announced that it will no longer allow “the sharing of private media, such as images or videos of private individuals without their consent”. The move takes effect through an expansion of the social media platform’s private information and media policy.

In practical terms, this means photos and videos can be removed if the photographer did not obtain consent from the people captured before sharing the item on Twitter. Individuals who find their image shared online without consent can report the post, and Twitter will then decide whether to take it down.

According to Twitter, this change comes in response to “growing concerns about the misuse of media and information that is not available elsewhere online as a tool to harass, intimidate and reveal the identities of individuals”.

While the move signals a shift towards greater protection of individual privacy, there are questions around implementation and enforcement.

Beginning today, we will not allow the sharing of private media, such as images or videos of private individuals without their consent. Publishing people's private info is also prohibited under the policy, as is threatening or incentivizing others to do so. https://t.co/7EXvXdwegG

— Twitter Safety (@TwitterSafety) November 30, 2021

Unlike some European countries, the UK does not have a strong tradition of image rights. France, for example, has a strong privacy culture around image rights under Article 9 of the French Civil Code.

This means there is little an individual can do to prevent an image of themselves being circulated freely online, unless it is deemed to fall within limited legal protection. For example, in relevant circumstances, an individual may be protected under section 33 of the United Kingdom’s Criminal Justice and Courts Act 2015, which addresses image-based sexual abuse. Legal protection may also be available if the image is deemed to contravene copyright or data protection provisions.

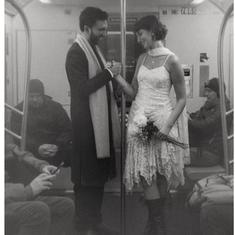

On the one hand, the freedom to photograph is fiercely defended, largely by the media and photographers. On the other, private, unwanted or humiliating photographs can cause significant upset and distress. This creates a clash of rights between the photographer (the legal owner of the photograph) and the person photographed (who often has no claim to the image).

My research

For my PhD, I surveyed 189 adults living in England and Wales on their experiences with images shared online, particularly via social media. My findings were published earlier this year.

Although some participants were not bothered or were even pleased to come across images of themselves shared online, for others, finding images which they did not consent to being posted made them feel uneasy. As one participant said: “Fortunately I did not look too bad and was not doing anything stupid, but I would prefer to control images of myself appearing in public.”

Another said: “I was quite angry about having my image shared on social media without my permission.”

A narrow majority of respondents supported stronger legal protection of individual rights, so that their image could not be used without consent (55% agreed, 27% were not sure and 18% disagreed). Meanwhile, 75% of respondents felt social media sites should play a greater role in protecting privacy.

I found people were not necessarily seeking legal protection in this regard. Many were just looking for some kind of avenue, such as it being the norm for photographs posted on social media without permission to be removed at the request of the person photographed.

Twitter’s policy change represents a pragmatic solution, giving individuals greater control over how their image is used. This may be helpful, particularly from a safety perspective, to the groups Twitter has identified, which include women, activists, dissidents and members of minority communities. For example, an image that reveals the location of a woman who has escaped domestic violence could put that person in significant danger if the image is seen by her abuser.

It may also be helpful to children subject to “sharenting” – having images shared online by their parents at every stage of growing up. In theory, these children can now report these images once they are old enough to understand how. Alternatively, they can have a representative do this for them.

Teething problems

The change has understandably caused some consternation, particularly among photographers. Civil liberties group Big Brother Watch has criticised the policy for being “overly broad”, arguing it will lead to censorship online.

It is important to note that this is not a blanket ban on images of individuals. Twitter has said images or videos that show people participating in public events (such as large protests or sporting events) generally would not violate the policy.

The company also draws attention to a number of exceptions, including where the image is newsworthy, in the public interest, or where the subject is a public figure. But greater clarity is needed on how the public interest will be interpreted. Similarly, how this policy will apply to the media is not entirely clear.

There were already a few teething problems within a week of the policy launching. Co-ordinated reports by extremist groups about photos of themselves at hate rallies reportedly resulted in numerous Twitter accounts belonging to anti-extremism researchers and journalists being suspended by mistake. Twitter said it had corrected the errors and launched an internal review.

There are also concerns that minority groups may be harmed by the policy if they run into difficulties sharing images highlighting abuse or injustice, such as police brutality. Although Twitter has said that such events would be exempted from the rule on the grounds of being newsworthy, how this will be enforced is not yet clear.

There are certain issues that need to be ironed out. But ultimately, this policy change does have the potential to protect individual privacy and facilitate a more considered approach to the sharing of images.

Holly Hancock is a Lecturer in Tort Law at the University of East Anglia.

This article first appeared on The Conversation.